Book & Author

Cathy O'Neil: Weapons of Math Destruction — How Big Data Increases Inequality and Threatens Democracy

By Dr Ahmed S. Khan

Chicago, IL

“In a system in which cheating is the norm, following the rules amounts to a handicap.”

“Justice cannot just be something that one part of society inflicts on the other.”

“Big Data processes codify the past. They do not invent the future.”

― Cathy O'Neil

In the present age of big data, algorithms affect and regulate people's lives: how they get admitted to college, obtain loans from banks, and buy health insurance. Increasingly it is machines, not humans, that make the final decisions. In theory, machines are neutral and can make decisions without bias. In Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, Cathy O’Neil exposes the dark side of big data: the mathematical models employed by public and private entities remain unregulated, carry embedded biases, and reinforce discrimination, giving advantage to the rich, punishing the poor, and ultimately undermining democracy.

Algorithms carry the intrinsic biases and hidden objectives of the people who develop them; they are only as good as the people who manipulate them. Case studies in the book illustrate the author's thesis that big data increases inequality in society. In addition to an introduction and conclusion, the book has ten chapters: 1. Bomb Parts: What is a Model? 2. Shell Shocked: My Journey of Disillusionment 3. Arms Race: Going to College 4. Propaganda Machine: Online Advertising 5. Civilian Casualties: Justice in the Age of Big Data 6. Ineligible to Serve: Getting a Job 7. Sweating Bullets: On the Job 8. Collateral Damage: Landing Credit 9. No Safe Zone: Getting Insurance, and 10. The Targeted Citizen: Civic Life.

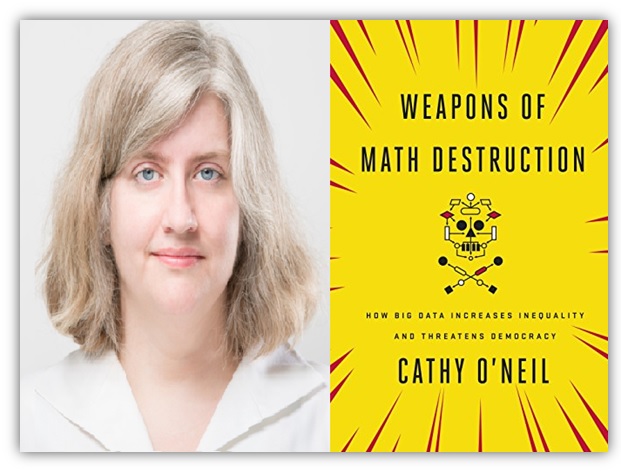

Cathy O’Neil is a prolific figure in both academia and industry. She has a PhD in mathematics and a background in finance and Big Data. She is a columnist for Bloomberg Opinion and a well-known blogger. She has also expressed her views in the critically acclaimed documentary The Social Dilemma. She is the founder of ORCAA, a consultancy providing algorithmic auditing services focused on safety, fairness, and the principled use of data, and she launched the Lede Program in Data Journalism at Columbia University. In her more recent book, The Shame Machine: Who Profits in the New Age of Humiliation, she explores how shame serves as a tool across a wide spectrum of sectors, including government, the healthcare industry, and the wellness domain.

In the introduction, the author, commenting on her passion for math and on its real-world implications and flaws, observes: “Math provided a neat refuge from the messiness of the real world. It marched forward, its field of knowledge expanding relentlessly, proof by proof. And I could add to it. I majored in math in college and went on to get my PhD. My thesis was on algebraic number theory, a field with roots in all that… we performed on numbers translated into trillions of dollars sloshing from one account to another. At first I was excited and amazed by working in this new laboratory, the global economy. But in the autumn of 2008, after I'd been there for a bit more than a year, it came crashing down. The crash made it all too clear that mathematics, once my refuge, was not only deeply entangled in the world's problems but also fueling many of them. The housing crisis, the collapse of major financial institutions, the rise of unemployment—all had been aided and abetted by mathematicians wielding magic formulas. What's more, thanks to the extraordinary powers that I loved so much, math was able to combine with technology to multiply the chaos and misfortune, adding efficiency and scale to systems that I now recognized as flawed.”

Discussing the missed opportunity to rectify the misuse of math, the author notes: “If we had been clear-headed, we all would have taken a step back at this point to figure out how math had been misused and how we could prevent a similar catastrophe in the future. But instead, in the wake of the crisis, new mathematical techniques were hotter than ever, and expanding into still more domains. They churned 24/7 through petabytes of information, much of it scraped from social media or e-commerce websites. And increasingly they focused not on the movements of global financial markets but on human beings, on us. Mathematicians and statisticians were studying our desires, movements, and spending power. They were predicting our trustworthiness and calculating our potential as students, workers, lovers, criminals.”

Reflecting on Big Data’s positive and negative impact on the economy, the author observes: “A computer program could speed through thousands of resumes or loan applications in a second or two and sort them into neat lists, with the most promising candidates on top… By 2010…mathematics was asserting itself as never before in human affairs…and the public largely welcomed it. Yet I saw trouble. The math-powered applications powering the data economy were based on choices made by fallible human beings. Some of these choices were no doubt made with the best intentions. Nevertheless, many of these models encoded human prejudice, misunderstanding, and bias into the software systems that increasingly managed our lives. Like gods, these mathematical models were opaque, their workings invisible to all but the highest priests in their domain: mathematicians and computer scientists. Their verdicts, even when wrong or harmful, were beyond dispute or appeal. And they tended to punish the poor and the oppressed in our society, while making the rich richer. I came up with a name for these harmful kinds of models: Weapons of Math Destruction or WMDs for short.”

Explaining the modus operandi of Big Data companies, the author states: “At Big Data companies like Google… researchers run constant tests and monitor thousands of variables. They can change the font on a single advertisement from blue to red, serve each version to ten million people, and keep track of which one gets more clicks. They use this feedback to hone their algorithms and fine-tune their operation.”
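The kind of test described in the passage above can be illustrated with a short sketch. It is not taken from the book: the click counts are hypothetical, the function names are invented for illustration, and the two-proportion z-score is only one common way to judge whether a difference in click rates is larger than chance would explain. Only the figure of ten million viewers per version comes from the quotation.

```python
import math


def click_through_rate(clicks: int, impressions: int) -> float:
    """Fraction of viewers who clicked the advertisement."""
    return clicks / impressions


def two_proportion_z(clicks_a: int, n_a: int, clicks_b: int, n_b: int) -> float:
    """z-score for the difference between two click-through rates (B minus A)."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / standard_error


# Ten million viewers per version, as in the quotation.
impressions = 10_000_000
# Hypothetical click counts, chosen only for illustration.
blue_clicks = 200_000
red_clicks = 203_000

blue_rate = click_through_rate(blue_clicks, impressions)
red_rate = click_through_rate(red_clicks, impressions)
z_score = two_proportion_z(blue_clicks, impressions, red_clicks, impressions)

print(f"blue: {blue_rate:.4%}  red: {red_rate:.4%}  z = {z_score:.2f}")
```

At this scale even a tiny difference in click rates stands out clearly from noise, which is why such feedback lets a company fine-tune its models so quickly.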

Reflecting on the limitations of models, the author states: “Ill-conceived mathematical models now micromanage the economy, from advertising to prisons. These WMDs have many of the same characteristics as the value-added model that derailed Sarah Wysocki's career in Washington's public schools. They're opaque, unquestioned, and unaccountable, and they operate at a scale to sort, target, or ‘optimize’ millions of people. By confusing their findings with on-the-ground reality, most of them create pernicious WMD feedback loops.”

Discussing the dilemma of using selective data to create models, the author observes: “Predictive models are, increasingly, the tools we will be relying on to run our institutions, deploy our resources, and manage our lives. But…these models are constructed not just from data but from the choices we make about which data to pay attention to — and which to leave out. Those choices are not just about logistics, profits, and efficiency. They are fundamentally moral.”

Expounding on the issue of auditing algorithms, the author notes: “As scientists, we should offer statistically meaningful and comprehensive approaches to the question of auditing an algorithm. As citizens, we must thoroughly vet those audits for relevance and clarity. This is a public conversation that's already begun, but I'd like to make a modest suggestion. Let's reframe the question of fairness: instead of fighting over which single metric we should use to determine the fairness of an algorithm, we should instead try to identify the stakeholders and weigh their relative harms. In the case of the recidivism risk algorithm, we'd need to compare the harm of a false positive—someone who is falsely given a high-risk score and unjustly imprisoned—against the harm of a false negative—someone who is falsely let off the hook and might commit a crime.”
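The author's suggestion to weigh relative harms can be made concrete with a minimal sketch. Everything in it is an assumption made for illustration: the error counts, the two candidate score thresholds, and the harm weights are hypothetical and do not come from the book or from any real recidivism data.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Error counts for one way of setting a risk-score threshold."""
    false_positives: int  # scored high-risk, but would not have reoffended
    false_negatives: int  # scored low-risk, but went on to reoffend


def weighted_harm(outcomes: Outcomes, harm_per_fp: float, harm_per_fn: float) -> float:
    """Total harm once each kind of error is given a stakeholder's weight."""
    return (outcomes.false_positives * harm_per_fp
            + outcomes.false_negatives * harm_per_fn)


# Hypothetical error counts for two candidate thresholds.
strict = Outcomes(false_positives=300, false_negatives=50)
lenient = Outcomes(false_positives=100, false_negatives=150)

# Hypothetical stakeholder weights: (harm per false positive, harm per false negative).
stakeholders = {
    "weights unjust imprisonment more": (3.0, 1.0),
    "weights missed reoffending more": (1.0, 3.0),
}

for name, (fp_weight, fn_weight) in stakeholders.items():
    strict_harm = weighted_harm(strict, fp_weight, fn_weight)
    lenient_harm = weighted_harm(lenient, fp_weight, fn_weight)
    preferred = "strict" if strict_harm < lenient_harm else "lenient"
    print(f"{name}: strict={strict_harm}, lenient={lenient_harm}, prefers {preferred}")
```

With these made-up numbers the two stakeholders prefer different thresholds, which illustrates the point of the quotation: the choice cannot be settled by a single fairness metric and requires an explicit judgment about whose harm counts for how much.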

Discussing data quality, the author notes: “When data scientists talk about ‘data quality,’ we're usually referring to the amount or cleanliness of the data—is there enough to train an algorithm? Are the numbers representing what we expect or are they random? But in this case we have no data quality issue; the data is available, and in fact it's plentiful. It's just wrong. And yet it is framed as if it's reliable, with Google Home and Alexa advertising their in-home services as if they're extensions of our brains, more convenient and dependable than kitchen dictionaries. We should demand truth in advertising before we have more pizzagates and church assassins. It's not just conspiracy-minded folks that get fooled, though. The technical community is to blame as well, and we have work to do. We need to ensure that data effectively and comprehensively represents the world—even, we hope, bears witness to the world and its suffering, rather than shaping the world—especially in ways that exacerbate misery.”

Reflecting on the implications of big corporations’ total control over data, the author observes: “When statistics itself, and the public's trust in statistics, is being actively undermined by politicians across the globe, how can we possibly expect the Big Data industry to clarify rather than contribute to the noise? We can because we must. Right now, mammoth companies like Google, Amazon, and Facebook exert incredible control over society because they control the data. They reap enormous profits while somehow offloading fact-checking responsibilities to others. It's not a coincidence that, even as he works to undermine the public's trust in science and in scientific fact, Steve Bannon sits on the board of Cambridge Analytica, a political data company that has claimed credit for Trump's victory while bragging about secret ‘voter suppression’ campaigns.”

The author concludes the book by advocating accountability for the algorithms: “Algorithms are only going to become more ubiquitous in the coming years. We must demand that systems that hold algorithms accountable become ubiquitous as well. Let's start building a framework now to hold algorithms accountable for the long term. Let's base it on evidence that the algorithms are legal, fair, and grounded in fact. And let's keep evolving what those things mean, depending on the context. It will be a group effort, and we'll need as many lawyers and philosophers as engineers, but we can do it if we focus our efforts. We can’t afford to do otherwise.”

In Weapons of Math Destruction, Cathy O’Neil explores the dark side of big data: its power and risks, and the intended and unintended consequences of mathematical models. The author offers unparalleled insight into, and analysis of, the challenges that lie ahead in personal, social, and business domains in an increasingly algorithmic world. It is an interesting read for all those who want to understand the dark side of technology. The book can be used as a reference in academic programs dealing with Science, Technology and Society (STS).