Book & Author

Kissinger, Schmidt and Huttenlocher: The Age of AI: And Our Human Future

By Dr Ahmed S. Khan

Chicago, IL

Emerging technologies of the 4th Industrial Revolution (4IR) are quietly and dramatically changing society: the way we interact with others, live, work, and educate our students. Such changes are enabled by technologies like Artificial Intelligence (AI), Big Data, Internet of Things (IoT), Augmented Reality, Blockchain, Robotics, Drones, Nanotechnologies, Genomics and Gene Editing, Quantum Computing, and Smart Manufacturing. The fusion of these technologies is impacting all sectors across the globe at unprecedented speed, and the time needed to remake the world is getting shorter (years) in contrast to previous industrial revolutions: 1IR, steam- and water-powered mechanization (centuries); 2IR, mass production and electrical power (multiple decades); and 3IR, electronics and IT (decades).

Among emerging technologies, Artificial Intelligence (AI) is becoming the most transformative technology in the history of humankind. The stakeholders, policy shapers, and decision makers of the present and future need to be educated about the social and ethical implications, and the intended and unintended consequences of AI, so that they can guide society to its appropriate applications, alert society to its failures, and provide a vision to society in helping to solve challenges and issues in a wise and humane manner.

The recent popularity of OpenAI’s chatbot ChatGPT has led to a rush of commercial investment in new generative AI tools, trained on large pools of data, that can produce human-like text, exquisite images, melodic music and reliable computer code. However, ethical and societal concerns have been raised about biased algorithms, which can generate false outputs and promote discriminatory outcomes.

There have been calls for disclosure laws to force AI providers to open up their systems for third-party scrutiny. In the EU, the proposed Artificial Intelligence (AI) Act aims to strengthen rules around data quality, transparency, human oversight and accountability. In the United States, the Biden administration issued an executive order in February 2023 directing federal agencies to root out bias in their design and use of new technologies, including AI, and to protect the American public from algorithmic discrimination and AI-related harm. In May 2023, the Biden administration announced a new initiative that focuses on three pathways: 1. New investments to power responsible American AI research and development (R&D); 2. Public assessments of existing generative AI systems; and 3. Policies to ensure the US government is leading by example on mitigating AI risks and harnessing AI opportunities.

In The Age of AI: And Our Human Future, Kissinger, Schmidt and Huttenlocher, three deep and accomplished thinkers, come together to explain how Artificial Intelligence (AI) is transforming human society vis-à-vis security, economics, order, reality and knowledge, and to discuss its risks and benefits. In seven chapters (1. Where We Are, 2. How We Got Here: Technology and Human Thought, 3. From Turing to Today—and Beyond, 4. Global Network Platforms, 5. Security and World Order, 6. AI and Human Identity, and 7. AI and the Future), the book addresses key questions: What do AI-enabled innovations in health, biology, space, and quantum physics look like? What do AI-enabled "best friends" look like, especially to children? What does AI-enabled war look like? Does AI perceive aspects of reality humans do not? When AI participates in assessing and shaping human action, how will humans change? And what, then, will it mean to be human?

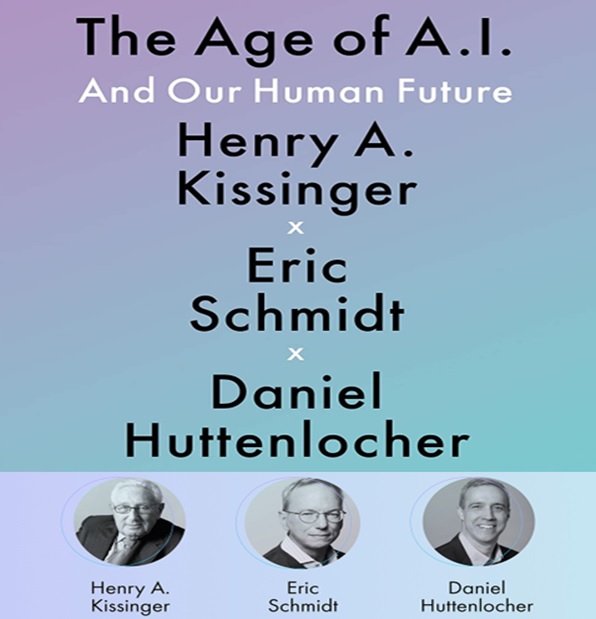

Henry A. Kissinger, recipient of the Nobel Peace Prize (1973), served as the 56th Secretary of State (1973-1977) and as Assistant to the President for National Security Affairs (1969-1975). Presently, he is chairman of his consulting firm, Kissinger Associates. Henry Kissinger is a prolific writer. His major works include A World Restored: Metternich, Castlereagh and the Problems of Peace, 1812-22; Nuclear Weapons and Foreign Policy; The Necessity for Choice: Prospects of American Foreign Policy; White House Years; Years of Upheaval; Diplomacy; Years of Renewal; Does America Need a Foreign Policy? Toward a Diplomacy for the 21st Century; Ending the Vietnam War: A History of America's Involvement in and Extrication from the Vietnam War; Crisis: The Anatomy of Two Major Foreign Policy Crises; On China; and World Order.

Eric Schmidt, a technologist, entrepreneur, and philanthropist, joined Google in 2001 and helped it become a global technological leader. He is the co-author of Trillion Dollar Coach: The Leadership Playbook of Silicon Valley's Bill Campbell; How Google Works; and The New Digital Age: Transforming Nations, Businesses, and Our Lives.

Daniel Huttenlocher is the inaugural dean of the MIT Schwarzman College of Computing. His academic and industrial experience includes serving as a computer science faculty member at Cornell and MIT, as a researcher and manager at the Xerox Palo Alto Research Center (PARC), and as CTO of a fintech start-up. Currently, he serves as the chair of the board of the John D. and Catherine T. MacArthur Foundation, and as a member of the boards of Amazon and Corning.

In the preface, explaining the rapid pace of AI’s evolution, the authors observe: “Every day, everywhere, AI is gaining popularity. An increasing number of students are specializing in it, preparing for careers in or adjacent to it. In 2020, American AI start-ups raised almost $38 billion in funding. Their Asian counterparts raised $25 billion, and their European counterparts raised $8 billion. Three governments—the United States, China, and the European Union—have all convened high-level commissions to study AI and report their findings. Now political and corporate leaders routinely announce their goals to ‘win’ in AI or, at the very least, to adopt AI and tailor it to meet their objectives. Each of these facts is a piece of the picture. In isolation, however, they can be misleading. AI is not an industry, let alone a single product. In strategic parlance, it is not a ‘domain.’ It is an enabler of many industries and facets of human life: scientific research, education, manufacturing, logistics, transportation, defense, law enforcement, politics, advertising, art, culture, and more. The characteristics of AI—including its capacities to learn, evolve, and surprise—will disrupt and transform them all. The outcome will be the alteration of human identity and the human experience of reality at levels not experienced since the dawn of the modern age.”

Describing the objective of the book, and how AI accesses reality, the authors state: “The technology is changing human thought, knowledge, perception, and reality—and, in so doing, changing the course of human history…This book is about a class of technology that augurs a revolution in human affairs. AI—machines that can perform tasks that require human-level intelligence—has rapidly become a reality. Machine learning, the process the technology undergoes to acquire knowledge and capability—often in significantly briefer time frames than human learning processes require—has been continually expanding into applications in medicine, environmental protection, transportation, law enforcement, defense, and other fields. Computer scientists and engineers have developed technologies, particularly machine-learning methods using ‘deep neural networks,’ capable of producing insights and innovations that have long eluded human thinkers and of generating text, images, and video that appear to have been created by humans. AI, powered by new algorithms and increasingly plentiful and inexpensive computing power, is becoming ubiquitous. Accordingly, humanity is developing a new and exceedingly powerful mechanism for exploring and organizing reality—one that remains, in many respects, inscrutable to us. AI accesses reality differently from the way humans access it.”

Reflecting on the shrinking human role and the expanding role of AI in reviewing information and deriving judgments, the authors note: “At every turn, humanity will have three primary options: confining AI, partnering with it, or deferring to it. These choices will define AI's application to specific tasks or domains, reflecting philosophical as well as practical dimensions. For example, in airline and automotive emergencies, should an AI copilot defer to a human? Or the other way around? For each application, humans will have to chart a course; in some cases, the course will evolve, as AI capabilities and human protocols for testing AI's results also evolve. Sometimes deference will be appropriate—if an AI can spot breast cancer in a mammogram earlier and more accurately than a human can, then employing it will save lives. Sometimes partnership will be best, as in self-driving vehicles that function as today's airplane autopilots do. At other times, though—as in military contexts—strict, well-defined, well-understood limitations will be critical. AI will transform our approach to what we know, how we know, and even what is knowable. The modern era has valued knowledge that human minds obtain through the collection and examination of data and the deduction of insights through observations.”

Describing the scope of human-machine partnership, the authors state: “In this era, the ideal type of truth has been the singular, verifiable proposition provable through testing. But the AI era will elevate a concept of knowledge that is the result of partnership between humans and machines. Together, we [humans] will create and run [computer] algorithms that will examine more data more quickly, more systematically, and with a different logic than any human mind can. Sometimes, the result will be the revelation of properties of the world that were beyond our conception—until we cooperated with machines. AI already transcends human perception—in a sense, through chronological compression or ‘time travel’: enabled by algorithms and computing power, it analyzes and learns through processes that would take human minds decades or even centuries to complete.”

Reflecting on the technology policy and intellectual capital of nations, the authors observe: “Historic global powers such as France and Germany have prized independence and the freedom to maneuver in their technology policy. However, peripheral European states with recent and direct experience of foreign threats—such as post-Soviet Baltic and Central European states—have shown greater readiness to identify with a US-led ‘technosphere.’ India, while still an emerging force in this arena, has substantial intellectual capital, a relatively innovation-friendly business and academic environment, and a vast reserve of technology and engineering talent that could support the creation of leading network platforms.”

Discussing AI’s role in escalating a military conflict between nations, the authors observe: “AI increases the inherent risk of preemption and premature use escalating into conflict. A country fearing that its adversary is developing automatic capabilities may seek to preempt it: if the attack ‘succeeds,’ there may be no way to know whether it was justified. To prevent unintended escalation, major powers should pursue their competition within a framework of verifiable limits. Negotiation should not only focus on moderating an arms race but also making sure that both sides know, in general terms, what the other is doing. But both sides must expect (and plan accordingly) that the other will withhold its most security-sensitive secrets. There will never be complete trust. But as nuclear arms negotiations during the Cold War demonstrated, that does not mean that no measure of understanding can be achieved…Defining the nature and manner of restraint on AI-enabled weapons, and ensuring restraint is mutual, will be critical...As AI weapons make vast new categories of activities possible, or render old forms of activities newly potent, the nations of the world must make urgent decisions regarding what is compatible with concepts of inherent human dignity and moral agency.”

Explaining the impact of AI on the security and world order, the authors observe: “Due to the dual-use character of most AI technologies, we have a duty to our society to remain at the forefront of research and development. But this will equally oblige us to understand the limits. If a crisis comes, it will be too late to begin discussing these issues. Once employed in a military conflict, the technology's speed all but ensures that it will impose results at a pace faster than diplomacy can unfold. A discussion of cyber and AI weapons among major powers must be undertaken, if only to develop a common vocabulary of strategic concepts and some sense of one another's red lines. The will to achieve mutual restraint on the most destructive capabilities must not wait for tragedy to arise. As humanity sets out to compete in the creation of new, evolving, and intelligent weapons, history will not forgive a failure to attempt to set limits. In the era of artificial intelligence, the enduring quest for national advantage must be informed by an ethic of human preservation.”

Expounding on the need to develop protocols for the ethical use of AI in the current unregulated and unmonitored environment, the authors state: “If humanity is to shape the future, it needs to agree on common principles that guide each choice. Collective action will be hard, and at times impossible, to achieve, but individual actions, with no common ethic to guide them, will only magnify instability. Those who design, train, and partner with AI will be able to achieve objectives on a scale and level of complexity that, until now, have eluded humanity—new scientific breakthroughs, new economic efficiencies, new forms of security, and new dimensions of social monitoring and control.”

The authors advocate forming a group composed of respected figures from the highest levels of government, business, and academia, to perform two functions: “1. Nationally, it should ensure that the country remains intellectually and strategically competitive in AI, 2. Both nationally and globally, it should study, and raise awareness of, the cultural implications AI produces.” The authors further state: “Technology, strategy, and philosophy need to be brought into some alignment, lest one outstrip the others. What about traditional society should we guard? And what about traditional society should we risk in order to achieve a superior one? How can AI's emergent qualities be integrated into traditional concepts of societal norms and international equilibrium? What other questions should we seek to answer when, for the situation in which we find ourselves, we have no experience or intuition?”

The authors conclude the book by observing: “The advent of AI, with its capacity to learn and process information in ways that human reason alone cannot, may yield progress on questions that have proven beyond our capacity to answer…Human intelligence and artificial intelligence are meeting, being applied to pursuits on national, continental, and even global scales. Understanding this transition, and developing a guiding ethic for it, will require commitment and insight from many elements of society: scientists and strategists, statesmen and philosophers, clerics and CEOs. This commitment must be made within nations and among them. Now is the time to define both our partnership with artificial intelligence and the reality that will result.”

The book covers a wide spectrum of AI issues: social and ethical implications, intended and unintended consequences, and the need for regulation. The bottom line is that machines are subject to human design, so human biases and programmed prejudices can be transferred to algorithms. Transparency and scrutiny are therefore required to create neutral algorithms. But looking at the big picture, and as a supplementary observation to the theme of the book, a heartless and spiritless machine devoid of love, compassion, humanity and wisdom can never become equal to humans or exert total control over them! No matter how smart models and chatbots become, even if they develop cloning ability, they can never outsmart their creators, the humans, who remain the ultimate decision makers! The biggest threat to humans is posed not by intelligent machines but by their unethical application by humans themselves!

The Age of AI: And Our Human Future by Kissinger, Schmidt and Huttenlocher is essential reading for general readers, students, educators and policy makers. The book can be used as a reference for academic programs in history of technology, science, technology and society (STS), ethics, business and public policy.

[Dr Ahmed S. Khan (dr.a.s.khan@ieee.org) is a Fulbright Specialist Scholar. Professor Khan has over 40 years of experience in higher education. He has authored several technical and non-technical books, including Nanotechnology: Ethical and Social Implications, and a series on Science, Technology & Society (STS).]